Vultr has introduced a new line of higher-performance cloud instances built on AMD’s EPYC processor family, signaling a broader industry shift toward more transparent and performance-focused chip disclosures in cloud services. The new offering centers on dedicated virtual CPUs that are not shared between instances, delivering more predictable performance and improved efficiency for compute-intensive workloads. This strategic move comes as cloud providers increasingly highlight the capabilities and distinctions of their chip architectures, moving away from earlier generations that were often treated as commodity components. The change reflects a broader trend: customers now expect to understand the hardware beneath their cloud services and to see measurable differences in speed, latency, and cost efficiency. While the EPYC-based machines are presented as a step toward more affordable, high-performance computing, Vultr’s claims about performance gains and pricing remain subject to the specifics of each workload, but they point to a deliberate reorientation from generic infrastructure toward hardware-aware cloud engineering.

AMD EPYC-Powered Instances: A New Era for Vultr

Vultr’s launch of AMD EPYC-powered instances marks a meaningful departure from the company’s earlier messaging, which was anchored in Intel-based infrastructure. Previously, Vultr highlighted “100% SSD and high-performance Intel CPUs” as the core strengths of its platform, a framing that positioned the company within a familiar, Intel-dominated performance narrative. With the new AMD-based lineup, Vultr is emphasizing dedicated virtual CPUs and the absence of CPU-sharing between instances, which translates to more consistent performance for applications that are sensitive to processor contention. The shift aims to address workloads that demand predictable throughput, such as real-time analytics, high-frequency trading simulations, batch processing with tight latency envelopes, and compute-heavy scientific or engineering tasks where fluctuations in CPU availability can undermine results. The dedicated CPU model reduces noisy neighbors and contention-induced latency, a pain point that has long influenced enterprise perceptions of multi-tenant cloud environments.

In addition to the CPU architecture change, Vultr is pursuing enhancements at the storage layer to further minimize bottlenecks. The new machines pair EPYC CPUs with flash storage attached over NVMe (Non-Volatile Memory Express) interfaces, a technology choice intended to provide substantially higher input/output operations per second (IOPS) and lower latency than traditional SATA-based SSD configurations. By integrating NVMe storage, Vultr aims to reduce the disk-bound bottlenecks that often throttle data-intensive workloads, improving throughput for applications such as large-scale data processing, machine learning preprocessing, caching at large scale, and high-concurrency web services that need fast access to frequently changing data. The engineering intent behind these design choices is to create a more balanced, end-to-end performance profile where CPU, memory bandwidth, and storage I/O work in concert rather than in competition.

The company asserts that the new AMD EPYC-based instances will deliver a substantial uplift in performance relative to Vultr’s predecessor generations. Management and engineering teams have suggested that the new configurations could deliver about a 40% improvement in speed over earlier instances, a figure that would be meaningful across a wide range of CPU-bound tasks. They also project that the price-to-performance ratio will improve, with estimates suggesting a 10% to 50% uplift in cost efficiency when compared to larger cloud providers for comparable CPU-heavy workloads. It is important to recognize that these figures are estimates tied to specific workloads and usage patterns; the actual benefits may vary depending on the particular application, data transfer patterns, memory usage, and software stack. Nonetheless, the emphasis remains on delivering greater predictability, more robust performance headroom, and a more compelling value proposition for developers and enterprises seeking efficient, scalable cloud compute.
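To make the arithmetic behind these estimates concrete, the sketch below shows one way to turn a claimed speedup and an hourly price into a price-to-performance comparison. It assumes that a "40% improvement in speed" means 40% more work completed per unit of time, which is only one possible reading of the claim. The function names, job rates, and prices are hypothetical and are not Vultr figures.

```python
"""Price-to-performance arithmetic (illustrative; all numbers are hypothetical)."""


def runtime_after_speedup(baseline_seconds: float, speedup: float) -> float:
    """Runtime if throughput rises by `speedup` (0.40 means 40% more work per second)."""
    return baseline_seconds / (1.0 + speedup)


def price_performance(work_per_hour: float, price_per_hour: float) -> float:
    """Units of work completed per dollar spent."""
    return work_per_hour / price_per_hour


def relative_uplift(candidate: float, baseline: float) -> float:
    """Fractional improvement of `candidate` over `baseline`."""
    return candidate / baseline - 1.0


if __name__ == "__main__":
    # Assumed inputs: an older instance finishes 100 jobs per hour, a newer one
    # finishes 40% more, and both cost the same made-up $0.10 per hour.
    old = price_performance(100.0, 0.10)
    new = price_performance(140.0, 0.10)
    print(f"runtime of a 100 s job: {runtime_after_speedup(100.0, 0.40):.1f} s")
    print(f"price-performance uplift: {relative_uplift(new, old):.0%}")
```

Under this reading, a 40% speedup shortens a 100-second job to roughly 71 seconds rather than 60, which is worth keeping in mind when comparing vendor claims stated as speed against those stated as time saved.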

A notable technical emphasis in Vultr’s messaging is the allocation of dedicated virtual CPUs rather than shared CPUs among tenants. This architectural choice is designed to mitigate the effects of “noisy neighbors,” a common problem in multi-tenant cloud environments where one customer’s heavy workload can degrade the performance experienced by others. By isolating CPU resources at the virtualization layer, Vultr aims to provide tighter performance envelopes and more deterministic behavior under load. The architectural decision aligns with broader industry moves toward containerization and virtualization strategies that prioritize performance isolation, cache locality, and predictable scheduling. For workloads that rely on consistent scaling, such as batch processing pipelines, streaming data analytics, or real-time inference, the expected improvements in predictability can translate into lower engineering risk and simpler capacity planning.
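One way to observe CPU contention from inside a Linux guest is the "steal" counter in /proc/stat, which records time the virtual CPU was ready to run but the hypervisor was serving something else. The sketch below parses two samples of the aggregate cpu line and reports the share of elapsed CPU time that was stolen. The helper names are illustrative, and the approach assumes a Linux guest on a hypervisor that reports steal time; it is not a method described by Vultr.

```python
"""Estimate CPU steal time from two /proc/stat samples (Linux guests only)."""
import time

# Field order of the aggregate "cpu" line, as documented in the proc(5) manual page.
FIELDS = ("user", "nice", "system", "idle", "iowait",
          "irq", "softirq", "steal", "guest", "guest_nice")


def parse_cpu_line(line: str) -> dict:
    """Parse a line such as 'cpu  10 0 5 80 1 0 0 4 0 0' into named counters."""
    parts = line.split()
    if not parts or parts[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    values = [int(v) for v in parts[1:1 + len(FIELDS)]]
    return dict(zip(FIELDS, values))


def steal_fraction(before: dict, after: dict) -> float:
    """Share of elapsed CPU time that was stolen between two samples."""
    # guest and guest_nice are already counted inside user and nice, so skip them.
    counted = [f for f in FIELDS if f not in ("guest", "guest_nice")]
    total = sum(after.get(f, 0) - before.get(f, 0) for f in counted)
    stolen = after.get("steal", 0) - before.get("steal", 0)
    return stolen / total if total > 0 else 0.0


def sample_steal(interval_seconds: float = 5.0, path: str = "/proc/stat") -> float:
    """Read the aggregate cpu line twice, `interval_seconds` apart."""
    def read() -> dict:
        with open(path) as handle:
            return parse_cpu_line(handle.readline())

    first = read()
    time.sleep(interval_seconds)  # sampling interval is an arbitrary choice
    return steal_fraction(first, read())
```

Calling `sample_steal()` on a shared-CPU instance and on a dedicated-CPU instance under similar load gives a rough, workload-independent signal of how much contention each one experiences.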

From a storage perspective, the integration of NVMe-based disks represents another deliberate optimization. NVMe is designed to exploit the full capabilities of modern solid-state drives, delivering higher IOPS and lower latencies than legacy storage technologies. In practical terms, this can translate into faster data ingestion, reduced time-to-insight for analytics tasks, and snappier boot and deployment times for compute-heavy services. Vultr’s approach here is to align the storage subsystem with the performance promises of the EPYC CPU family, creating a more cohesive platform for data-intensive workloads. While the exact gains will depend on data characteristics, query patterns, and the specific software stack, the combination of dedicated CPUs and NVMe storage is intended to produce a conspicuously more consistent and higher-performing environment for customers who need reliable, scalable compute.

In communications with the market, Vultr’s leadership has stressed that their AMD transition is part of a broader strategy to diversify the hardware foundation of their cloud services beyond Intel. The stated rationale includes the ability to offer more affordable alternatives to established cloud options while delivering competitive performance metrics. The company’s executives have described the AMD-based initiative as a milestone in their ongoing efforts to bring high-performance computing within reach of developers and organizations that require robust compute resources without sacrificing cost efficiency. The narrative suggests a broader industry pattern where cloud providers increasingly foreground hardware distinctions as a differentiator, moving beyond generic infrastructure to hardware-aware service design.

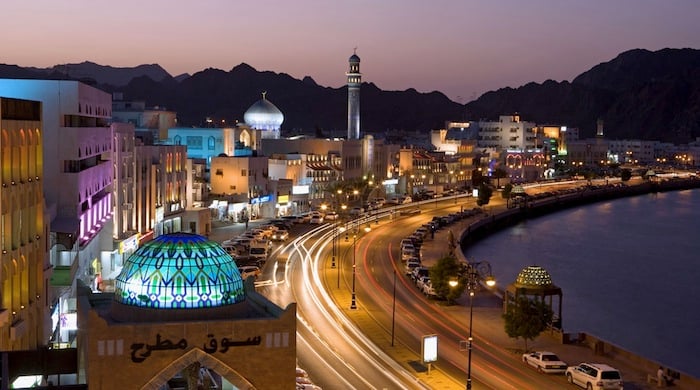

To support these claims, Vultr has communicated that the new AMD EPYC instances are intended to be deployed across its global footprint, with plans to scale availability in multiple regions. The company’s data center strategy aligns with the aim of broad geographic coverage, making the AMD-based offerings accessible to users around the world who need lower latency and better performance from compute-intensive workloads. The operational implications of such expansion include more consistent regional performance, improved data locality for applications that require proximity to end-users, and the potential for regional optimization of network ingress and egress costs. In short, the AMD-based line is positioned not only as a performance upgrade but also as a strategic lever to broaden Vultr’s reach and depth of service across its worldwide data center network.

As with any technology shift of this scale, the rollout will proceed in phases, with ongoing validation across a spectrum of workloads to confirm real-world benefits and to refine pricing and feature sets. Vultr acknowledges that performance gains can be workload-dependent, and as such, it emphasizes the importance of benchmarking across representative customer scenarios to establish baseline expectations. The company’s communications emphasize the value of offering customers more choice in infrastructure configurations, enabling them to tailor compute resources to specific application profiles and business objectives. The broader implication for the market is a normalization of hardware-aware cloud offerings, where customers can pair their software architectures with precisely matched hardware profiles to optimize performance and cost.

The Industry Context: From Intel to AMD and ARM-Based Chips

Within the wider cloud computing ecosystem, Vultr’s AMD-based initiative sits amid a notable shift in how cloud providers communicate and demonstrate the capabilities of their underlying hardware. In parallel with Vultr’s upgrade, major players have begun to publicize the performance differentials and architectural features of newer generations of chips. For instance, Google’s Tau VMs, which are built on AMD EPYC processors, have been highlighted for substantial performance improvements and favorable price-performance characteristics. Reports from the industry indicate that Tau VMs achieved significant gains in absolute performance and cost efficiency, signaling that the move toward AMD-based offerings is not isolated to a single provider but part of a broader industry transition.

Meanwhile, ARM-based processors have also taken center stage in the cloud compute conversation. Amazon’s Graviton series, built around ARM cores, has been advancing through successive generations, with claims of meaningful performance improvements and competitive price points. The Graviton3, in particular, has been described as delivering dramatic speedups relative to its Graviton2 predecessor for certain workloads, specifically some 16-bit floating-point calculations that are common in machine learning tasks. The landscape suggests a multi-architecture approach to cloud efficiency and performance: AMD’s EPYC cores provide strong multi-core, high-throughput capabilities for general-purpose and data-intensive workloads, while ARM-based Graviton chips offer advantages in energy efficiency and price/performance for targeted workloads. Across the industry, customers are increasingly exposed to these nuanced distinctions, which encourages better workload-to-hardware alignment and more informed procurement decisions.

The move away from Intel-dominated conversations also reflects a broader trend toward more transparent disclosure of hardware characteristics. In earlier years, some cloud providers positioned their offerings as “commodity compute” with limited attention to the specifics of CPU generations, core counts, or cache configurations. As workloads become more specialized and performance-driven—particularly in AI, data analytics, and high-performance computing—the relevance of precise hardware details has grown. Enterprises now seek to understand and optimize the interaction between software and hardware, including how CPU architecture, memory bandwidth, cache hierarchy, and I/O subsystems influence model training times, inference throughput, and data processing speeds. The industry-wide emphasis on chip specifics signals a maturation of the cloud market, where customers are more discerning about performance guarantees, service-level expectations, and total cost of ownership.

This broader context also includes ongoing developments in machine learning and AI acceleration. The cloud providers’ emphasis on CPU-based performance does not exist in isolation from the accelerators and specialized hardware that accompany AI workloads, such as GPUs and domain-specific processors. Instead, it complements those capabilities by ensuring that the fundamental compute infrastructure—where preprocessing, data shaping, and orchestration take place—can sustain fast, reliable throughput. The result is a more holistic cloud compute ecosystem in which CPUs, storage, memory, and accelerators are coordinated to deliver end-to-end performance improvements. The industry is moving toward a model where customers can choose from a spectrum of hardware configurations, each optimized for different workload profiles, with clear expectations around performance, scalability, and cost.

In this evolving environment, cloud providers are also refining their messaging around total cost of ownership and performance predictability. The ability to deliver consistent performance while controlling operating costs is increasingly a critical differentiator for enterprise buyers who need reliable, scalable infrastructure for mission-critical applications. This dynamic plays into the rhetoric around dedicated virtual CPUs and NVMe storage, as customers seek configurations that minimize latency, maximize throughput, and reduce unpredictable cost spikes associated with shared resources or suboptimal I/O subsystems. The industry’s tenor suggests that customers are more willing than ever to evaluate hardware choices in depth, and they expect cloud vendors to articulate the concrete benefits of each option, including how these choices translate into measurable outcomes for their applications and data workloads.

Vultr’s Strategic Transition: From Intel to AMD

The pivot away from Intel-centric configurations marks a strategic realignment for Vultr, reflecting a broader market willingness to diversify compute foundations. Historically, the company’s public messaging highlighted Intel-based performance characteristics, positioning its platform as a reliable, high-performance option for customers who prioritized speed and consistency. The move to AMD indicates a willingness to reframe the value proposition around architecture-specific advantages, including the potential for stronger multi-threaded performance, competitive pricing, and access to a broader ecosystem of AMD-based optimizations. This transition aligns with industry expectations that enterprises increasingly value hardware options and the flexibility to tailor infrastructure to workload requirements.

In practical terms, Vultr’s shift involves more than simply swapping CPUs. It encompasses a holistic upgrade to the underlying hardware stack, including PCIe-attached NVMe storage that is designed to deliver higher IOPS and lower latency. The combination of EPYC processors with NVMe storage is intended to reduce disk-bound bottlenecks and improve data throughput for compute-intensive tasks. For developers and operations teams, this means smoother execution of data pipelines, faster containerized workloads, and better performance for applications that demand rapid data access and processing. The engineering intent is to create a more balanced platform where the CPU, memory subsystem, and fast storage work in concert to deliver consistent results across a variety of workloads.

From a branding and market positioning standpoint, Vultr’s strategic shift signals a broader willingness among independent cloud providers to curate a distinct hardware narrative in a market historically dominated by a handful of large players. By emphasizing independence and performance-oriented design choices, Vultr seeks to attract developers, startups, and enterprises that value transparent hardware differentiation and the ability to optimize for cost-to-performance across diverse workloads. The company’s leadership has underscored that the repositioning is part of an ongoing effort to expand global reach, improve performance characteristics for CPU-heavy computations, and maintain a competitive price point.

The operational implications of this transition include a detailed focus on performance validation, customer education, and regional rollout planning. Vultr’s teams are tasked with validating the real-world performance of AMD EPYC-based instances across representative workloads, refining deployment best practices, and communicating clear expectations around capacity planning, scaling, and potential workload-specific considerations. As with any hardware transition, the process entails iterative optimization, benchmarking against a spectrum of workloads—ranging from synthetic microbenchmarks to real-world data processing pipelines—and careful alignment with service-level objectives. The ultimate aim is to deliver a more predictable, high-performance, cost-efficient cloud platform that resonates with customers who require robust compute resources coupled with transparent hardware characteristics.

In the same breath, Vultr has signaled a commitment to storage performance as a key element of the overall value proposition. The company’s statements indicate an emphasis on deploying NVMe storage to avoid common disk-bound bottlenecks for disk-intensive software. This approach is designed to complement the CPU upgrade, ensuring the storage subsystem can meet the throughput and latency demands of modern workloads such as analytics, AI inference pipelines, and large-scale data processing. By investing in faster disks and more capable CPU architectures, Vultr is aiming to deliver a more cohesive and responsive cloud experience for developers and enterprises seeking predictable performance at scale.

The strategic shift is also accompanied by a broader conversation about workload placement and pricing strategy. Vultr’s leadership has indicated that the new AMD EPYC-based instances are expected to deliver stronger price-to-performance metrics when measured against larger cloud providers for CPU-dominant workloads. However, the company acknowledges that pricing is not a universal constant, and the benefits may vary depending on the workload mix, data-transfer patterns, and the nature of the compute tasks at hand. This measured approach to pricing underscores a practical understanding that enterprise value is not solely a function of raw speed but of sustained performance, stability under load, and the total cost of ownership across the lifecycle of an application or service.

In terms of ecosystem and partnership dynamics, the AMD transition could influence how Vultr collaborates with software developers, system integrators, and customers who rely on specific hardware optimizations. Compatibility considerations, driver availability, and support for AMD-specific features can shape the ease with which customers migrate or deploy new workloads on EPYC-based infrastructure. The company’s communications emphasize a commitment to delivering a reliable path for customers who want to experiment with AMD-powered configurations while preserving continuity for existing deployments that continue to run on Intel-based instances. The reality of a mixed hardware environment invites customers to adopt a hybrid strategy, selecting AMD EPYC for compute-centric workloads while leveraging other configurations for workloads with different performance profiles or licensing constraints.

AI Scaling and the Limits: Power, Costs, and Inference Delays

Enterprise AI scaling is shaping new constraints around power, token costs, and inference latency. As organizations push toward larger models and more sophisticated AI capabilities, the energy footprint of AI workloads has emerged as a strategic consideration. Power caps and the rising costs associated with token-based inference continue to influence how teams architect and deploy AI systems. In this context, enterprises are seeking ways to convert energy usage into a competitive advantage, optimizing their infrastructure to achieve real throughput gains while keeping operations sustainable and cost-effective. Cloud providers are responding by introducing hardware configurations and software optimization strategies designed to maximize throughput per watt and reduce total energy consumption for AI workloads.

Architecting efficient inference pipelines has become a central objective for many enterprises. As AI models scale, the demand for high-throughput inference accelerates, with implications for latency, batch processing, and system stability. Architectural decisions now increasingly focus on designing systems that deliver consistent throughput at high utilization levels, while minimizing energy costs and avoiding bottlenecks that degrade model performance. This includes exploring optimizations at multiple layers, from data preprocessing and model optimization to allocation of compute resources and memory management. In practice, it means that teams are evaluating hardware accelerators, software frameworks, and cloud configurations in tandem to identify the most cost-effective paths to real throughput gains.

Sustainability has become a core consideration in AI infrastructure planning. Enterprises recognize that the energy efficiency of AI workloads is not only an environmental concern but also a direct factor in operational costs. Effective AI deployments require balancing the need for rapid inference with the energy required to sustain such performance, including the power draw of CPUs, memory, storage systems, and networking components. Cloud providers and enterprises alike are investing in innovations that reduce energy consumption, such as more efficient processors, better thermal management, and optimized data placement that minimizes movement and energy-intensive data transfers. The net effect is a more holistic view of AI systems, where hardware selection, software optimization, and energy strategy are tightly interwoven to deliver scalable, cost-effective outcomes.

The discussions around AI scaling also emphasize the importance of predictable cost structures. Token costs, model inference pricing, and data transfer charges influence how teams budget for AI workstreams. As workloads grow, small differences in price per token or per operation can compound into significant cost variances over time. Consequently, organizations are increasingly prioritizing transparent pricing models and clear performance baselines to ensure that AI initiatives deliver the expected return on investment. Cloud providers that offer a transparent view of hardware capabilities and performance benchmarks help customers make more informed decisions about which configurations to adopt for AI workloads and how to scale them responsibly as demand grows.
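The compounding effect described above is simple to put into numbers. The sketch below is a minimal cost model, assuming a steady daily token volume and a flat price per million tokens; the function names, volumes, and prices are made up for illustration and do not reflect any provider's actual pricing.

```python
"""How small per-token price differences compound (hypothetical numbers)."""


def inference_cost(tokens_per_day: float, price_per_million: float, days: int) -> float:
    """Total spend in dollars for a steady daily token volume."""
    return tokens_per_day / 1_000_000 * price_per_million * days


def cost_gap(tokens_per_day: float, price_a: float, price_b: float, days: int) -> float:
    """Extra spend at price_b compared with price_a over the same period."""
    return (inference_cost(tokens_per_day, price_b, days)
            - inference_cost(tokens_per_day, price_a, days))


if __name__ == "__main__":
    # Assumed workload: 500 million tokens per day for one year,
    # priced at $0.50 versus $0.60 per million tokens.
    gap = cost_gap(500_000_000, 0.50, 0.60, 365)
    print(f"extra annual spend: ${gap:,.0f}")
```

With these made-up inputs, a ten-cent difference per million tokens adds up to $18,250 over a year, which is why a clear performance and pricing baseline matters before a workload scales.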

In this broader AI context, the role of independent cloud providers—such as Vultr—gains additional significance. By offering hardware options that emphasize dedicated compute and fast storage, these providers can help organizations optimize AI workflows without being locked into the more centralized, feature-rich but potentially more expensive portfolios of the largest players. The independence of such providers often translates into flexible pricing, straightforward service agreements, and a focus on core compute-and-storage performance rather than an expansive catalog of services that may not be essential to the customer’s immediate needs. For enterprises seeking efficient AI infrastructure that is both cost-conscious and performance-driven, the combination of AMD EPYC-based compute with NVMe storage may represent a compelling option, particularly when paired with careful workload design and optimization efforts.

Deployment Details and Performance Promises

The technical rationale behind Vultr’s AMD EPYC-based instances centers on a deliberate pairing of high-core-count CPU cores with fast storage and strong memory bandwidth. The EPYC architecture is designed to deliver robust multi-threaded performance, which benefits workloads that parallelize well across numerous CPU cores. When matched with the NVMe-based storage stack, the result is a platform that can support large-scale data movement, high-throughput simulation, and a variety of analytics workloads with low latency. The net effect is a compute environment that is well-suited to data-centric and compute-heavy tasks that demand steady performance rather than short-lived bursts of speed.

Vultr’s stated estimates suggest a 40% improvement in performance relative to its predecessor generations, with an expectation of 10% to 50% better price-to-performance ratios compared with some of the larger cloud providers. It is important to emphasize that these figures are estimates tied to workloads and configurations, and real-world results will vary. Workload characteristics such as memory bandwidth requirements, cache usage patterns, vectorized computation capabilities, and data transfer profiles will influence how well users realize these improvements. Nevertheless, the promise of faster, more predictable performance is a core part of the messaging, aimed at addressing the needs of developers who require reliable compute power for complex applications and data-intensive pipelines.

The NVMe storage integration is highlighted as a differentiator. Vultr emphasizes that its offering includes NVMe storage, which is designed to outperform other SSD implementations on IOPS and latency metrics. By reducing storage-related bottlenecks, the company asserts that the overall system performance will be enhanced, particularly for workloads with high read/write intensity, large-scale data processing, or intensive caching. This claim aligns with general industry observations that high-quality storage setups can have a significant impact on the real-world performance of modern cloud workloads. It also supports the claim that enterprise-grade storage characteristics—such as consistently high IOPS, low latency, and reliable throughput—are valuable complements to high-performance CPUs in achieving end-to-end performance gains.
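IOPS, throughput, and latency are linked by simple relations, which helps when comparing storage claims quoted in different units. The sketch below converts an IOPS figure into throughput for a given block size and uses Little's law (average outstanding requests equal arrival rate times average latency) to estimate the queue depth needed to sustain it. The function names and the example figures are hypothetical and are not measurements of Vultr's storage.

```python
"""Relating IOPS, block size, throughput, and queue depth (illustrative)."""


def throughput_mb_per_s(iops: float, block_size_bytes: int) -> float:
    """Data rate in megabytes (10**6 bytes) per second."""
    return iops * block_size_bytes / 1_000_000


def required_queue_depth(iops: float, latency_seconds: float) -> float:
    """Little's law: average requests in flight = request rate * average latency."""
    return iops * latency_seconds


if __name__ == "__main__":
    # Assumed device: 100,000 IOPS at 4 KiB blocks with 100 microseconds of latency.
    print(f"{throughput_mb_per_s(100_000, 4096):.1f} MB/s")
    print(f"queue depth of about {required_queue_depth(100_000, 100e-6):.0f}")
```

The second relation explains why a low-latency device can reach a given IOPS figure with fewer requests in flight, and why benchmarks run at a queue depth of one often report far lower IOPS than headline numbers.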

Customers should, however, expect variability due to the heterogeneity of real-world workloads. Vultr has been careful to flag that its performance estimates are contingent on workload type and configuration. This caveat reflects a rigorous understanding that performance is not a one-size-fits-all property; it depends on how software is written, how data is managed, how memory is allocated, and how much data needs to be moved through the system. For example, some workloads may benefit more from CPU efficiency and memory bandwidth, while others may be more sensitive to storage I/O or network throughput, depending on the pattern of data access and the structure of the application. Recognizing this nuance helps customers set reasonable expectations and pursue targeted benchmarking to measure real-world gains.
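Targeted benchmarking of this kind can start with a very small harness. The sketch below times a workload repeatedly after a few warm-up runs and reports the median and 95th-percentile duration, since tail latency is often where contention shows up. The function names, the nearest-rank percentile choice, and the sample CPU-bound workload are all assumptions made for illustration; a real evaluation would substitute a representative job from the customer's own stack.

```python
"""A minimal timing harness for comparing instance types (illustrative)."""
import math
import statistics
import time


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty sequence of numbers."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def benchmark(workload, repeats=20, warmup=3):
    """Run `workload` repeatedly and summarize wall-clock timings in seconds."""
    for _ in range(warmup):  # warm caches and any lazy initialization
        workload()
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        workload()
        timings.append(time.perf_counter() - start)
    return {
        "runs": len(timings),
        "median_s": statistics.median(timings),
        "p95_s": percentile(timings, 95),
    }


def cpu_bound_example():
    """Stand-in workload; replace with a job that resembles production."""
    return sum(i * i for i in range(200_000))


if __name__ == "__main__":
    print(benchmark(cpu_bound_example))
```

Running the same harness on two instance types and comparing the gap between median and 95th-percentile timings gives a first indication of how predictable each one is, independent of its raw speed.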

From a product strategy standpoint, Vultr has indicated that the new AMD EPYC instances will be deployed across its existing data center footprint, with plans to expand availability across the network over time. The intention is to provide broad geographic coverage so that users around the world can experience the benefits of AMD-based compute with reduced latency and improved performance. The expansion approach aligns with the company’s emphasis on geographic reach as a key lever for delivering fast, responsive cloud services to developers and enterprises that operate in diverse regions. A larger footprint also enables more efficient data localization and regional optimization of network transit, which can contribute to improved performance for applications that rely on tight latency budgets or data sovereignty requirements.

In addition to the core compute and storage improvements, Vultr has highlighted ongoing efforts to optimize software and workflow integrations with the AMD-based platform. The engineering teams are focusing on ensuring compatibility with popular Linux distributions and software stacks used by developers, data scientists, and IT operations personnel. The goal is to minimize friction in deployment and to enable a smooth migration path for customers who want to test AMD EPYC-based instances alongside their existing infrastructure. This approach reflects a practical understanding that enterprise adoption hinges on operational simplicity and clear migration guidance, rather than solely on raw hardware performance figures.

The marketing narrative for the AMD EPYC line stresses performance consistency under load, the potential for cost efficiency, and the strategic value of hardware diversity in cloud infrastructure portfolios. While performance gains are a central theme, the messaging also underscores the stability and reliability associated with enterprise-grade CPUs, memory architectures, and storage subsystems. Customers are invited to consider how these components work together to deliver predictable throughput and stable, scalable capacity for demanding compute workloads. The overarching objective is to provide a compelling alternative to larger providers by combining strong hardware performance with straightforward, developer-friendly pricing and procurement models.

Competition and the Independent Cloud Landscape

Vultr exists within a growing tier of smaller cloud providers that compete with the large incumbents by offering more aggressive pricing, simplified product stacks, and a focus on delivering solid performance with fewer frills. Named players in this segment include DigitalOcean, Backblaze, and Wasabi, among others. These independent providers can often deliver competitive price-to-performance ratios by concentrating on core compute and storage offerings and by avoiding the complexity and overhead that can accompany broader enterprise cloud portfolios. In many cases, their smaller scale and targeted focus enable them to move quickly, test new configurations, and bring new hardware options to market faster than their larger counterparts.

Vultr’s approach to competition emphasizes a global data center footprint as a key differentiator. The company reports having a network of 23 data centers around the world and outlines plans to offer the new AMD EPYC-based instances across all of these locations. The emphasis on geographic reach is intended to provide customers with broader coverage and lower latency, which can be especially important for developers and enterprises with distributed teams or global user bases. The assertion that independent cloud providers are accelerating their global presence suggests a broader industry trend toward closer-to-market infrastructure for customers who require low-latency access to compute resources.

In this competitive landscape, independent providers often position themselves on price and simplicity. They aim to offer straightforward, commoditized compute options with fewer optional features and fewer sales layers, which can translate into faster procurement and more predictable usage terms. The market positioning also highlights a strategic focus on delivering value through price-to-performance trade-offs rather than an expansive feature catalog. This stance resonates with customers who want transparent, easy-to-understand pricing and a clear, direct route to compute power without the need for extensive enterprise negotiations or lengthy onboarding processes.

Executives at Vultr have underscored that independent cloud companies can thrive by delivering a more transparent set of offerings, with less reliance on complex usage contracts or negotiable discounts that can obscure total cost of ownership. This perspective aligns with a broader movement toward product-led growth in which customers evaluate the basic compute and storage primitives directly and make purchases based on measurable performance and cost outcomes. The emphasis on a streamlined product lineup also supports the claim that small providers can maintain price discipline while continuing to invest in hardware innovations such as AMD EPYC and NVMe storage.

During the rollout discussion, Vultr highlighted a philosophy centered on delivering disruptive price-to-performance as a core strategic priority. The company’s leadership argued that the majority of users who need compute power for development, testing, and deployment want straightforward, cost-effective access to resources rather than an overly complex suite of services. In this framing, the independent providers differentiate themselves by offering core capabilities—compute, storage, and networking—at competitive prices and with an emphasis on speed to deployment and clarity of terms. The message appeals to developers and teams who value autonomy and speed, including those who prefer to shop around for compute resources to meet specific budgetary and performance requirements.

The broader implications for the market include a renewed focus on hardware-centered differentiation within the cloud ecosystem. As larger players invest heavily in AI accelerators and expansive platform services, independent providers like Vultr emphasize the value of core infrastructure—compute power, fast storage, and predictable performance—delivered in a straightforward, transparent package. The market is likely to continue seeing a mix of hardware choices, with customers assessing the trade-offs between raw CPU performance, memory bandwidth, storage throughput, and the total cost of ownership. In this environment, independent providers can carve out niches by combining competitive pricing with reliable, scalable compute, while maintaining a close alignment between hardware capabilities and customer workloads.

Business Milestones and Financing Path

Beyond the hardware and performance discussions, Vultr’s corporate narrative also includes notable milestones related to growth, revenue, and financing strategy. The parent company behind the Vultr brand, Constant, has publicly positioned itself as a bootstrapped, product-led business that has achieved substantial scale without relying on venture capital funding. This stance speaks to a broader trend among independent software and cloud providers who pursue growth by reinvesting profits and prioritizing product quality and customer retention over external capital injections. The company’s leadership has highlighted ARR milestones that underscore sustainable growth and a disciplined approach to expansion and product development.

As part of its growth story, Constant reported reaching a significant annual recurring revenue threshold without taking venture funding. The leadership has framed this achievement as evidence that a bootstrapped, product-led model can scale to hundreds of millions in ARR without external equity investment. This narrative has resonated with investors and customers who value profitability, cash flow discipline, and a path to scale that is not contingent on VC funding cycles. The emphasis on a debt-free or equity-light growth trajectory highlights a distinct alternative to the more capital-intensive growth models seen in some sectors of the tech industry.

In communications surrounding these milestones, the leadership stressed the importance of autonomous, customer-centric growth. They pointed to a business model in which product quality, reliability, and a straightforward pricing approach drive customer acquisition and retention rather than heavy reliance on marketing campaigns or complex sales structures. The aim is to offer customers a transparent, easy-to-understand value proposition that aligns with the needs of developers, small businesses, and enterprises seeking predictable, affordable cloud compute and storage. The narrative suggests confidence that a lean, customer-focused approach can sustain durable growth and allow for continued investment in hardware innovations, regional expansion, and platform improvements without the heavy pressure of external capital requirements.

The strategic emphasis on a bootstrapped, product-led approach can also shape how Constant allocates resources toward research and development, customer success, and platform improvements. Without the pressure of VC-driven burn rates, the company can prioritize user experience and performance outcomes as the primary drivers of expansion. In practice, this can translate into short development cycles, rapid iteration on pricing and packaging, and a focus on delivering tangible value to customers through better compute performance, lower latency, and improved reliability. The combination of AMD-based compute, NVMe storage, and a disciplined approach to scaling positions Vultr as a potential long-term player in the independent cloud arena, especially for organizations that place a premium on performance, predictability, and straightforward procurement.

As this narrative unfolds, the company’s leadership remains attentive to the competitive landscape, customer demand, and the evolving economics of cloud computing. The AMD EPYC rollout is framed not merely as a hardware upgrade but as a strategic lever for broader growth, pricing discipline, and market differentiation. The independent cloud segment is likely to continue to evolve as providers optimize their hardware offerings, expand their footprints, and refine their value propositions to serve developers and enterprises seeking efficient, scalable cloud infrastructure. The historical emphasis on speed-to-market, hardware transparency, and straightforward contracts continues to inform Vultr’s approach as it navigates a landscape that increasingly rewards hardware-aware engineering and predictable performance at a compelling price point.

Market Significance and Takeaways

The introduction of AMD EPYC-based instances by Vultr underscores a broader market trend toward hardware-aware cloud services and transparent performance metrics. For developers and enterprises evaluating cloud options, the shift toward dedicated virtual CPUs and fast NVMe storage represents a meaningful step in the direction of predictability and efficiency. The trend also reflects a maturing cloud market in which customers increasingly expect to understand the underlying hardware choices that power their workloads and to see tangible evidence of performance advantages and cost efficiency. While the precise benefits will depend on workload and configuration, Vultr’s emphasis on dedicated CPU resources, NVMe storage, and a nuanced pricing narrative aligns with a growing demand for greater control over compute infrastructure and better insight into cost-to-performance outcomes.

The broader industry context, including Google’s Tau VMs on AMD EPYC and AWS’s Graviton family, suggests that multiple architectures will coexist in a diverse cloud ecosystem. Enterprises may adopt mixed-hardware strategies, selecting EPYC-based instances for workloads that benefit from robust multi-core performance and high-IOPS storage, while using ARM-based Graviton instances for energy-efficient or cost-sensitive workloads. This diversification allows organizations to optimize performance and cost across a spectrum of use cases, from AI training and inference to data processing, web services, and scientific simulations. The result is a cloud environment better able to meet the heterogeneous needs of modern organizations and developers.

From a competitive standpoint, Vultr’s independent positioning—emphasizing a streamlined product, straightforward pricing, and a broad footprint—offers a compelling alternative to the largest cloud providers for customers who prioritize price-to-performance and simplicity. The company’s commitment to ongoing expansion across its data center network, along with its NVMe-enabled storage strategy, positions it to attract users who value predictable performance and cost efficiency. The independent cloud segment’s emphasis on core compute capabilities—without the complexity of a sprawling ecosystem of services—appeals to developers and teams who want to focus on building applications rather than negotiating multi-layered cloud offerings.

The business milestones around Constant’s ARR and bootstrapped growth also provide a narrative of resilience and sustainable scaling. By achieving substantial ARR without external equity funding, Constant demonstrates that a lean, product-first approach can reach significant scale while maintaining financial discipline. This milestone adds to the broader story of how private, independent players can grow in a market that has historically favored the largest incumbents, with their scale and heavy capital investment. The combination of advanced hardware, global reach, and a product-led, customer-centric growth model suggests that independent cloud providers will continue to challenge established paradigms and offer viable alternatives to enterprise buyers seeking value, transparency, and performance.

Overall, Vultr’s AMD EPYC initiative reflects a convergence of hardware innovation, strategic market positioning, and operational discipline. It signals that cloud customers increasingly expect and deserve hardware-specific performance data, predictable throughput, and competitive pricing, all delivered through an independent, developer-friendly platform. As the industry continues to evolve, such moves are likely to shape the competitive dynamics of cloud procurement, pushing larger providers to respond with more transparent hardware disclosures, better performance guarantees, and pricing that reflects real-world workload characteristics. The result is a cloud market that honors both the engineering reality of complex hardware systems and the business need for efficient, scalable, and affordable compute resources.

Conclusion

Vultr’s launch of AMD EPYC-powered, dedicated-CPU instances paired with NVMe storage marks a significant shift in cloud infrastructure strategy and market messaging. By moving away from Intel-exclusive branding toward a model that emphasizes dedicated resources, predictable performance, and faster storage, the company aligns itself with a broader industry trend toward hardware-aware cloud services. The broader context includes competitive momentum from Google’s Tau VMs on AMD EPYC and AWS’s Graviton-based offerings, illustrating a landscape in which multiple architectures coexist and compete on performance and cost. Vultr positions itself as an independent, price-conscious option with a global footprint, aiming to offer a straightforward, disruptive price-to-performance proposition for developers and enterprises seeking robust compute without the complexity and premium price often associated with larger cloud providers.

The company’s strategy also encompasses a broader commitment to storage performance and a deliberate shift away from the previous Intel-centric narrative, signaling a more nuanced understanding of the complete compute stack. While performance improvements are touted, the results remain workload-dependent, underscoring the importance of benchmarking and thoughtful workload design. Additionally, Vultr’s public discourse around bootstrapped growth and substantial ARR underscores the viability of a product-led, capital-efficient model in the cloud space. As independent cloud providers continue to vie for market share through competitive pricing, architectural transparency, and global reach, the industry is likely to see continued experimentation with hardware configurations, deployment models, and performance optimization strategies that collectively push the entire market toward greater efficiency and clearer value for customers.