OpenAI and DeepSeek have each pushed notable advances in large-language-model access and reasoning, reshaping how free users interact with sophisticated AI tools. DeepSeek introduced its cost-efficient R1 model, which DeepSeek reports performs on par with OpenAI’s o1, while OpenAI rolled out its o3-mini reasoning model to all ChatGPT users at no cost, albeit with a rate limit. This marks a significant shift: the average user can now experiment with increasingly capable AI helpers without paying for premium access. In ChatGPT, o3-mini is reached through a dedicated “Reason” button next to the message composer, a sign of OpenAI’s effort to build reasoning options directly into the standard interface. The result is a more capable tool for complex problems, from coding challenges to scientific and mathematical questions, available to anyone with a free ChatGPT account, subject to usage limits.

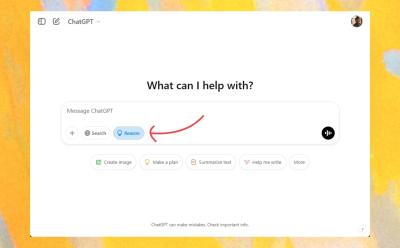

Overview: o3-mini and the new Reason button in ChatGPT

The o3-mini model is OpenAI’s latest attempt to balance advanced reasoning with broad accessibility. It is designed to rival or surpass earlier free-tier offerings on many tasks, especially those that require multi-step deduction, structured problem-solving, and synthesis of information from multiple sources. The Reason button invokes the model directly from the standard chat interface, giving users a simple, discoverable path to higher-level reasoning without switching to a separate mode or typing a command. The design goal is a smooth experience: more capable reasoning without complex prompts or settings, which lowers the barrier to experimentation for students, professionals, and casual users alike.

Notably, o3-mini can be used together with web search. This lets the model pull in up-to-date information when needed, enabling better-informed answers to questions that depend on current data, recent discoveries, or evolving standards. OpenAI also presents o3-mini as part of a spectrum of capabilities across free and paid tiers. Free users get o3-mini at a medium level of reasoning effort, while ChatGPT Plus subscribers can also choose o3-mini-high, which uses more compute and spends extra time thinking to produce deeper analyses. This tiered approach differentiates the experience by resource allocation and user needs without locking free users out of reasoning models altogether.

In terms of output, OpenAI says o3-mini displays a sanitized version of its chain of thought: users see the reasoning steps in a readable, structured form rather than a raw stream of internal deliberation. This improves transparency and shows users how solutions are formed, but the model’s raw internal output is not revealed; sanitization keeps the process interpretable while maintaining safeguards around internal reasoning traces.

Two further limits matter. First, o3-mini does not currently support file analysis. You can upload files to ChatGPT, but o3-mini cannot yet process or extract insights from them, so plan accordingly when working with documents, datasets, or media. Second, free usage is capped: free users can send up to 10 messages per day, with the exact limit fluctuating as servers scale with demand and capacity.

In practice, o3-mini gives a wide audience an accessible entry point into advanced reasoning, balancing performance, cost, and user experience. For developers and researchers, it can serve as a baseline for evaluating how a mid-tier reasoning system performs on a broad set of tasks. For teachers and students, it offers a way to explore complex problem-solving in coding, science, and mathematics without any financial commitment. For professionals who use AI to brainstorm ideas or check reasoning steps, it is a convenient, cost-free option for iterative exploration and rapid testing. As with any evolving technology, though, users should keep realistic expectations about accuracy and latency, and about the gap between apparent capability and actual performance on edge cases or highly specialized domains.

One operational detail deserves emphasis: because o3-mini can draw on web search, results can include more recent information, but they may also vary with the sources retrieved and the timing of retrieval. The sanitized chain of thought helps users see how a solution was reached, which is valuable for learning and critique, but the output still depends on the model’s training data and knowledge cutoff. The lack of file analysis means workflows that rely on reading documents or datasets need alternatives, such as summarizing content manually or using other tools alongside ChatGPT. Together, these features (free access with a rate limit, a higher-compute variant for paying users, web-search augmentation, and visible but sanitized reasoning) define o3-mini’s practical utility for everyday tasks, study, and exploration.

Use ChatGPT o3-mini for Free on the Web

For users who primarily operate through the web interface, OpenAI provides a straightforward pathway to access the o3-mini model on a free account. The process begins with opening the ChatGPT website and signing in using a standard free account. Once signed in, you will find the dedicated “Reason” button adjacent to the message composer. Activating this button switches the session into a mode that leverages the o3-mini model for the conversation, enabling more robust reasoning capabilities for your prompts. This setup is designed to accommodate a broad range of inquiries, including those that demand structured problem-solving, logic, and multi-step reasoning.

On the web, free users get o3-mini at a “medium” level of reasoning effort, a balance between speed and thoroughness. ChatGPT Plus subscribers can also select o3-mini-high, the same model run at a higher reasoning effort. This mode uses more compute and takes longer to respond, in exchange for more intricate analyses and deeper reasoning. The split keeps the model accessible to casual users while giving paying users a path to deeper scrutiny and more nuanced conclusions.
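
ChatGPT does not give free users a reasoning-effort control, but the same trade-off appears as an explicit setting in OpenAI’s developer API, where o3-mini accepts a `reasoning_effort` parameter. The sketch below is only an illustration under stated assumptions: it builds a Chat Completions request body as a plain dictionary and sends nothing over the network, and the mapping of the ChatGPT labels “o3-mini” and “o3-mini-high” to the API values "medium" and "high" follows the description above rather than any documented ChatGPT internals.

```python
import json

# Assumption: ChatGPT's "o3-mini" corresponds to the API's "medium" reasoning
# effort and "o3-mini-high" to "high", as described in this article.
# The API also accepts "low", which the ChatGPT options described here do not mention.
CHATGPT_LABEL_TO_EFFORT = {
    "o3-mini": "medium",
    "o3-mini-high": "high",
}


def build_request(prompt: str, label: str = "o3-mini") -> dict:
    """Build a Chat Completions request body for o3-mini without sending it."""
    if label not in CHATGPT_LABEL_TO_EFFORT:
        raise ValueError(f"unknown ChatGPT label: {label!r}")
    return {
        "model": "o3-mini",
        "reasoning_effort": CHATGPT_LABEL_TO_EFFORT[label],
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    body = build_request("Prove that the square root of 2 is irrational.", "o3-mini-high")
    # With the official openai Python SDK, this could be sent as
    # client.chat.completions.create(**body); that requires an API key.
    print(json.dumps(body, indent=2))
```

Running it prints a request body with `"reasoning_effort": "high"`. Actually sending such a request goes through the paid API, which is billed separately from a free ChatGPT account.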

A notable part of the web experience is web search integration: when appropriate, the model can supplement its internal knowledge with recent information retrieved from the internet, which can improve accuracy and relevance for questions about current events, evolving standards, or recent findings. OpenAI also makes the reasoning process more transparent by presenting a sanitized chain of thought. You can follow the logical steps leading to an answer, which is useful both for learning and for spotting where an answer went wrong. Keep in mind that this is a readable presentation of the reasoning, not an exact transcript of the model’s raw internal process.

Several practical constraints apply to o3-mini on the web. First, file analysis is not supported: you can upload files to ChatGPT, but o3-mini will not process them, so handle file-based tasks with other tools designed for that purpose. Second, free users face a daily message cap; depending on system load, you may be limited to around 10 messages per day, and the limit can be tighter or looser from day to day as demand and server capacity change. Third, reliability varies, particularly for difficult or ambiguous prompts. Although o3-mini is designed to excel at coding, science, math, and reasoning, it is not infallible, and some responses may contain inaccuracies or misinterpretations, especially in edge cases or highly technical domains.

To use o3-mini on the web, follow a simple sequence: go to the ChatGPT website, sign in with your free account, find the Reason button, and enable it. You can then ask questions that require advanced reasoning, such as multi-step coding problems, algorithm design questions, theoretical physics puzzles, or intricate mathematical proofs. Combining reasoning with web search can improve the quality and timeliness of answers, making o3-mini useful for students, researchers, and professionals who want quick, well-structured explanations backed by sources found through search.

To compare models, use the regenerate option. After a response is generated, a drop-down menu lets you re-run the prompt with a different model, including o3-mini. This makes it possible to compare o3-mini’s output with other models without leaving the chat, supporting an iterative approach to prompting and evaluation. In practical testing, o3-mini at medium reasoning effort did not match OpenAI’s o1 on every task, but it can outperform other free-tier options, particularly on complex or structured problems.

In summary, for users who want to try OpenAI’s advanced reasoning on the web without a subscription, o3-mini combines free access (with rate limits), integrated web search, and a readable sanitized chain of thought. It is a meaningful upgrade over simpler chat models for many tasks, though not a perfect substitute for higher-end paid models on every task. When you want to test o3-mini against other options, the regenerate feature lets you switch models within the same chat and compare reasoning and output quality directly.

Practical steps at a glance

- Open the ChatGPT website and sign in with a free account.

- Locate and click the “Reason” button to activate o3-mini.

- Start with prompts that involve coding challenges, science inquiries, mathematics, or other reasoning-intensive tasks.

- Free users get “medium” reasoning effort, a balanced default; Plus users can choose “o3-mini-high” for deeper analysis and longer thinking times.

- When needed, enable web search integration to augment responses with current information.

- View the sanitized chain-of-thought to understand the reasoning steps, while recognizing that the raw internal output remains hidden.

- Note that file analysis is not supported; plan accordingly if your task involves processing uploaded documents.

- You can send a limited number of free messages per day, with the exact limit subject to system load and capacity.

- After receiving a response, use the regenerate drop-down to re-run the prompt with o3-mini or another model and compare the answers.

Use ChatGPT o3-mini for Free on Android and iOS

On mobile, the interaction is slightly different: the ChatGPT app for Android and iOS does not yet include the “Reason” button found on the web. Mobile users can still reach o3-mini by generating a response and then changing the model used for it. This extra step keeps higher-level reasoning available despite the current limits of the mobile UI.

To use o3-mini on Android or iPhone, open the ChatGPT app, enter your question or prompt as usual, and generate the initial response. Then change the model used for that response: on mobile, this is typically done by long-pressing the assistant’s reply to open a context menu. Select “Change model” and choose o3-mini from the list. The app then generates a new response using o3-mini. This workflow takes an extra step, but it gives mobile users the same reasoning capability as the web, even though the controls are placed differently.

A few practical considerations apply on mobile. First, the app may show a slightly different set of options and may take more taps to reach the model-switching control. Second, response times can vary with network conditions; the reasoning itself runs on OpenAI’s servers, so your phone’s hardware mainly affects how smoothly the app runs, not how well the model reasons. Third, the sanitized chain of thought is also shown in the mobile app where available, though its formatting may differ slightly from the web version. Finally, mobile inherits the web version’s file-analysis limit: you can upload files in ChatGPT, but o3-mini will not process them at this time, so handle file-based tasks with other tools or methods.

The practical steps to access o3-mini on Android or iOS can be summarized as follows: open the ChatGPT app on your device, enter your question, and generate the initial response. Once the response appears, perform a long press or equivalent hold gesture on the reply to reveal the “Change model” option. From there, select the o3-mini model and confirm. The app will then provide a subsequent answer grounded in the o3-mini reasoning. This method ensures users on mobile devices can exercise advanced reasoning capabilities even in the absence of a dedicated mobile Reason button, maintaining parity with the web experience in terms of capability.

Users should also manage expectations about responsiveness on mobile. Because o3-mini spends time reasoning before it answers, complex prompts can take a while, and an unstable network connection can lengthen waits or interrupt a response. Still, the mobile path is a practical way to use o3-mini away from a desktop or laptop, supporting learning and problem-solving on the go.

Quick mobile workflow recap

- Launch the ChatGPT app on Android or iOS and sign in.

- Enter a question and generate the initial response as you would normally.

- After the answer appears, long-press or hold the reply to reveal a context menu.

- Choose “Change model” and select the o3-mini model, then confirm.

- The app will deliver a response based on o3-mini reasoning, enabling complex questions to be tackled on mobile devices.

- Remember that file analysis remains unsupported by o3-mini, and if your task requires reading documents, use alternative tools or methods.

- Response times may vary with network conditions, so allow extra time for more involved prompts on mobile.

Use ChatGPT o3-mini for Free on Windows and macOS

For users who prefer working from a desktop environment, OpenAI provides a direct workflow to access the o3-mini model on Windows and macOS through the ChatGPT desktop application. The process is straightforward: launch the desktop app on your computer, then locate and click the “Reason” button to begin a session that uses the o3-mini model to respond to your prompts. This arrangement mirrors the web-based experience, delivering the same capabilities in a familiar desktop context and allowing users to take advantage of larger screen real estate and potentially more comfortable typing environments. By clicking the Reason button, you initiate the o3-mini workflow, enabling you to ask questions that benefit from deeper reasoning, structured analysis, and reasoning-assisted problem solving.

In practice, using o3-mini on Windows or macOS means you can pose challenging questions in coding, science, mathematics, and complex reasoning, and in many cases get extended, well-structured analyses in return. The desktop environment suits long prompts and multi-step tasks, where you may want to organize inputs, edit queries, and review outputs with easier navigation and faster typing. The desktop app also supports the same features as the browser version, such as web search to supplement the model’s internal knowledge and the sanitized chain-of-thought display that shows the reasoning behind the answers.

In anecdotal testing, o3-mini at medium reasoning effort did not always match o1, the model DeepSeek R1 was compared against. While it fell short of o1 on certain tasks, it did noticeably better than GPT-4o and GPT-4o-mini on challenging tasks that free-tier users previously struggled to get right. This suggests o3-mini is a meaningful improvement for many real-world problems, especially those requiring multi-step reasoning, without making other models obsolete. The practical takeaway: treat o3-mini as a strong general-purpose reasoning tool on desktop, particularly for education, programming, and scientific exploration, while staying aware of its limits in highly specialized domains.

From a usability perspective, Windows and macOS users can rely on the desktop app’s consistent layout, which mirrors the web experience in terms of features and capabilities. The ability to access the o3-mini via the Reason button on the desktop provides a stable, high-clarity environment in which to work through complex prompts, test hypotheses, and iteratively refine questions. The combination of a robust keyboard-driven workflow and a larger monitor can be especially beneficial when performing code debugging, mathematical derivations, or data analysis tasks that require careful step-by-step reasoning and frequent reference to intermediate conclusions. As with other platforms, you will find that the o3-mini can produce high-quality results in many cases, while occasionally producing errors on more challenging prompts. This variability underscores the importance of critical evaluation and validation when relying on AI-assisted reasoning for important decisions or precise calculations.

If you are curious about enhancing your desktop experience with o3-mini, you can explore how the model handles a range of prompts—from algorithm design and data analysis to theoretical questions and cross-disciplinary reasoning. The desktop workflow enables you to compare the o3-mini’s output with other models or configurations by using the regenerate option and switching models as needed. This can help you assess differences in reasoning styles, answer quality, and response times, supporting a rigorous approach to prompting and evaluation. The net effect is that Windows and macOS users have a reliable, powerful path to engage with OpenAI’s o3-mini on a device that emphasizes productivity and sustained concentration, without sacrificing the accessibility benefits designed into the free-tier experience.

Desktop-use essentials

- Open the ChatGPT desktop app on Windows or macOS and sign in.

- Click the “Reason” button to activate the o3-mini workflow.

- Pose questions requiring multi-step reasoning, coding, science, or math, and review the structured responses.

- Use the integrated web search when relevant to augment answers with current information.

- Observe the sanitized chain-of-thought display to understand the reasoning steps, while recognizing that the raw internal processes are not exposed.

- If needed, use the regenerate feature to compare different reasoning approaches or to switch back to o3-mini during the same session.

- Remember that file analysis remains unsupported for the o3-mini, so plan document-based tasks accordingly.

Performance, limitations, and practical takeaways

Across platforms, o3-mini is a meaningful advance in free-tier reasoning, offering more sophisticated problem-solving than earlier free models while keeping resource use and response times reasonable. In informal testing, o3-mini at medium reasoning effort did not always match o1, the benchmark in the DeepSeek R1 versus o1 comparison. This matters for setting realistic expectations: o3-mini does well in many practical contexts, particularly those that benefit from multi-step reasoning, but it is not a guaranteed substitute for o1 or other higher-powered models in every scenario.

One of o3-mini’s most compelling advantages is its ability to combine reasoning with live web information, which can yield more accurate or timely answers for questions about current events, recent scientific developments, or changing standards. The sanitized chain of thought adds transparency, letting users follow the argument and check individual steps without exposing the model’s full internal deliberation. This is especially valuable for learners who want to see how to structure an explanation and for professionals who need to audit a line of reasoning. The trade-off is that sanitization makes reasoning readable by abstracting away some internal detail, which can matter in edge cases or when very fine-grained interpretability is required.

A practical limitation for all users is the absence of file analysis support in the current o3-mini configuration. While you can upload files to ChatGPT, the o3-mini model will not process or interpret them directly. This means that workflows heavily dependent on analyzing documents, datasets, or media files require supplementary tools or manual methods to extract actionable insights before engaging the model or after receiving its outputs. Additionally, the daily usage cap for free users—subject to system load—means that even productive sessions are bounded by availability, which can influence how you plan long-running tasks, iterative experiments, or batch prompts. These constraints, while meaningful, are part of the broader strategy to provide broad access to advanced reasoning while maintaining performance and stability for all users.

From a practical perspective, o3-mini’s value lies in its balance of capability and accessibility. For students and educators, it offers an accessible way to work through complex problems with structured reasoning and a visible account of the steps involved. For developers and engineers, it is a testbed for experimenting with reasoning strategies, prompt design, and structured problem-solving within a familiar chat interface. For researchers and professionals, web search integration makes it useful for drafting ideas, evaluating hypotheses, and quickly reviewing related concepts without leaving the chat. The differences across platforms (web, Android, iOS, Windows, and macOS) reflect design choices aimed at delivering consistent capability while accommodating device-specific constraints.

In practice, when you approach a challenging prompt with o3-mini, you may notice that results are often quite strong for tasks like debugging code, outlining research plans, solving math problems with multiple steps, or proposing structured reasoning strategies. However, you should also anticipate occasional missteps or errors, especially for highly specialized or domain-specific topics. The model’s performance can be influenced by the nature of the prompt, the clarity of the request, and whether the task benefits from live data or theoretical reasoning. With this understanding, the best approach is to treat o3-mini as a powerful assistant for exploration and problem-solving, while maintaining an active critical stance—checking outputs, validating results, and refining prompts to guide the model toward the most reliable conclusions.

Several best practices help you get the most out of o3-mini. Start with a clearly defined problem statement, then ask the model to outline its steps before diving into details. Use the sanitized chain-of-thought display to check whether the model’s reasoning matches your expectations, and ask for clarification or a deeper dive into specific steps as needed. Where current facts or data matter, enable web search, and use the regenerate feature to compare responses from different models or to test alternative reasoning paths. Free access is meant to be approachable for a broad audience, so choose tasks that fit within the daily message limit while still challenging the model’s reasoning.
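
The “problem statement first, then an outline of steps” practice can be captured in a reusable template. The sketch below is a hypothetical helper, not an official OpenAI prompt format: `build_reasoning_prompt` and its wording are illustrative assumptions, and it only assembles text that you would paste into ChatGPT.

```python
from typing import List, Optional


def build_reasoning_prompt(problem: str, constraints: Optional[List[str]] = None) -> str:
    """Assemble a prompt that states the problem, lists constraints,
    and asks for a step outline before the detailed work.

    The wording is a hypothetical template, not an official OpenAI format.
    """
    if not problem.strip():
        raise ValueError("problem statement must not be empty")
    lines = [f"Problem: {problem.strip()}"]
    if constraints:
        lines.append("Constraints:")
        lines.extend(f"- {c}" for c in constraints)
    # Ask for the plan first so the answer can be checked step by step.
    lines.append(
        "First, outline the numbered steps you plan to take. "
        "Then work through each step and state the final answer on its own line."
    )
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_reasoning_prompt(
        "Find all integers n such that n^2 + n is divisible by 6.",
        ["Show each case modulo 3", "Keep the answer under 200 words"],
    ))
```

Putting the problem, the constraints, and the request for a plan into a single message also helps when free access is limited to a handful of messages per day.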

Key takeaways

- The o3-mini provides a free, accessible avenue for advanced reasoning with optional web-search support and a sanitized chain-of-thought display.

- Free users have a daily message limit, subject to system load; Plus users gain access to o3-mini-high with more compute time.

- File analysis is not supported by o3-mini, even though uploading files to ChatGPT is possible.

- The model’s performance is strong on many tasks but not guaranteed to match the highest-tier models in every scenario.

- The regenerate feature lets users switch models within the same chat session, making it easy to compare answers and iterate on prompts.

Conclusion

OpenAI’s decision to offer the o3-mini reasoning model to free ChatGPT users, with a Reason button and web search integration, is a meaningful step toward broader, more capable AI-assisted problem solving. The model balances accessibility and depth, showing a sanitized version of its reasoning so users can see how conclusions are reached while internal processes stay protected. Across web, desktop, and mobile, o3-mini offers a consistent path to higher-level reasoning, even as platform-specific limitations, such as the lack of file analysis and the missing Reason button on mobile, shape the exact workflow. Free access with a daily cap, the option to step up to o3-mini-high with Plus, and the ability to compare outputs through regeneration make o3-mini a practical tool for coding, science, math, and general reasoning. It may not reach o1-level performance in every scenario, but its gains over earlier free offerings, especially on challenging tasks, make it valuable for learners, professionals, and curious users who want to test ideas and iterate on complex solutions. As you experiment, evaluate outputs critically, verify important results with external sources, and use web search and the sanitized chain of thought to deepen your understanding and refine your prompts.